Virtual clusters give developers instant, self-service environments with zero-touch operations, while giving central platform teams operational control, efficient resource usage, and compliance with best practices.

Virtualization in general is a way to apply software-defined segmentation, isolation, and management to virtual representations of physical resources, whether storage, machines, or clusters. Among other benefits, virtualization allows developers to safely self-serve. Many technologies have followed a similar trajectory: think of servers and virtual machines. Now, as more organizations adopt Kubernetes and start to struggle with best-practice enforcement and with the management and resource-utilization problems caused by cluster sprawl, they are starting to apply the same virtualization techniques to clusters.

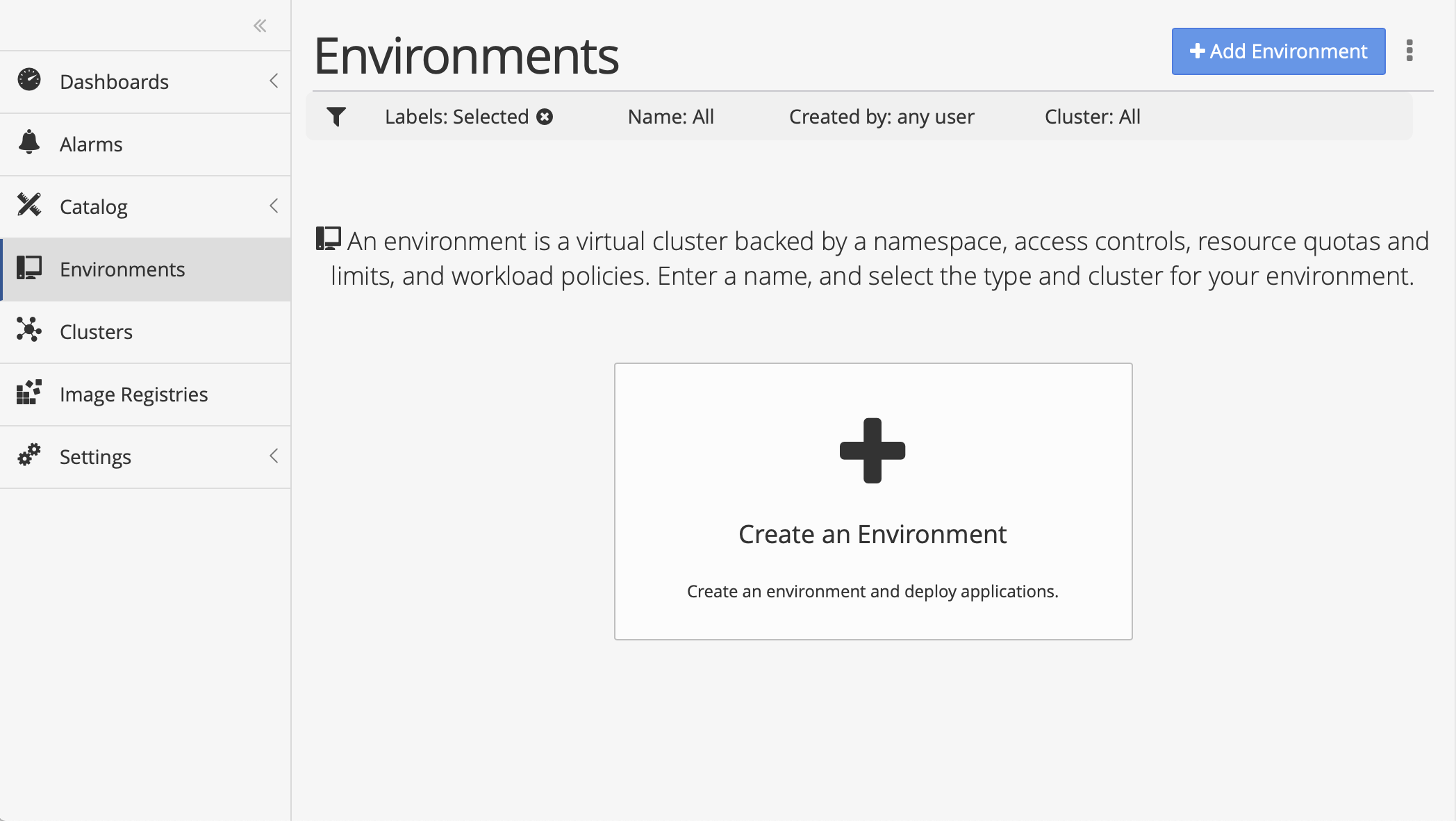

How virtual clusters work

Virtual clusters improve resource utilization by packing multiple virtual clusters onto a single physical cluster. Although the terms "virtual cluster" and "namespace" are often used interchangeably, the two concepts are not identical. Namespaces are the foundation for virtual clusters, but each virtual cluster can (and must) be configured separately, so access controls, security policies, network policies, and resource limits can differ from one virtual cluster to the next. The advantage of virtual clusters is that, for users, they mostly appear and behave just like physical clusters.

Virtual clusters can also make it easier to ensure that governance policies are enforced uniformly throughout the organization. The virtualization layer makes it easier to use automation tools, including a policy engine like Kyverno, to audit and mutate configurations, create policies, and ensure that best practices for security settings, resource utilization, and monitoring are set up appropriately.

Virtualizing the Kubernetes Data Plane

Here is the definition of a Kubernetes namespace:

Kubernetes supports multiple virtual clusters backed by the same physical cluster. These virtual clusters are called namespaces.

The Kubernetes objects that need to be properly configured for each namespace are shown and discussed below:

Access Controls: Kubernetes access controls allow granular permission sets to be mapped to users and teams. This is essential for sharing clusters, and ideally is integrated with a central system for managing users, groups and roles.
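As a minimal sketch of the access-control piece, the Python below builds a namespaced RoleBinding manifest that grants a group the built-in `edit` ClusterRole inside a single namespace. The namespace `team-a` and the group `team-a-developers` are hypothetical names chosen for illustration; in practice the group would come from whatever central identity system the cluster is integrated with.

```python
import json


def namespace_role_binding(namespace: str, group: str, cluster_role: str = "edit") -> dict:
    """Build a RoleBinding that scopes a ClusterRole to one namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{group}-{cluster_role}", "namespace": namespace},
        "subjects": [
            {
                "kind": "Group",
                "name": group,
                "apiGroup": "rbac.authorization.k8s.io",
            }
        ],
        # Referencing a ClusterRole from a RoleBinding grants its permissions
        # only inside the RoleBinding's own namespace.
        "roleRef": {
            "kind": "ClusterRole",
            "name": cluster_role,
            "apiGroup": "rbac.authorization.k8s.io",
        },
    }


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    print(json.dumps(namespace_role_binding("team-a", "team-a-developers"), indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed manifest could be piped to `kubectl apply -f -`.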

Workload Policies: Nirmata's open-source policy engine, Kyverno, allows administrators to configure exactly how Kubernetes workloads should behave. This gives platform teams tremendous flexibility to validate configurations, mutate resources to bring them into compliance, and generate resources on demand, fully automating policy management for on-demand virtual clusters.
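To make the "validate" case concrete, here is a minimal sketch that builds a Kyverno ClusterPolicy requiring every Pod to carry a non-empty label. The label key `team` and the policy and rule names are hypothetical. The field layout follows the `kyverno.io/v1` ClusterPolicy schema as commonly documented, so it is worth checking against the Kyverno release in use; failure-action settings are deliberately left out.

```python
import json


def require_label_policy(label: str) -> dict:
    """Build a Kyverno ClusterPolicy that checks Pods for a non-empty label."""
    # Derive a DNS-friendly policy name from the label key.
    safe_name = label.replace("/", "-").replace(".", "-")
    return {
        "apiVersion": "kyverno.io/v1",
        "kind": "ClusterPolicy",
        "metadata": {"name": f"require-{safe_name}"},
        "spec": {
            "rules": [
                {
                    "name": "check-label",  # hypothetical rule name
                    "match": {"any": [{"resources": {"kinds": ["Pod"]}}]},
                    "validate": {
                        "message": f"The label '{label}' is required.",
                        # "?*" is a wildcard pattern meaning "at least one character".
                        "pattern": {"metadata": {"labels": {label: "?*"}}},
                    },
                }
            ]
        },
    }


if __name__ == "__main__":
    # "team" is an illustrative label key, not one the article prescribes.
    print(json.dumps(require_label_policy("team"), indent=2))
```

Mutate and generate rules use the same `match` block with a different action, which is what lets one policy engine cover all three behaviors.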

Network Policies: Network policies are Kubernetes firewall rules that control inbound and outbound traffic to and from pods. By default, Kubernetes allows all pods within a cluster to communicate with each other. This is undesirable in a shared cluster, so it is important to configure default network policies for each namespace and then allow users to add firewall rules for their applications.
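A default policy set for a namespace might look like the sketch below: one NetworkPolicy that denies all inbound traffic to every pod, and a second that allows traffic again from pods in the same namespace. The policy names and the namespace `team-a` are hypothetical, and the choice to restrict only ingress is an assumption made to keep the example small.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Deny all inbound traffic to every pod in the namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {
            "podSelector": {},  # an empty selector matches all pods in the namespace
            "policyTypes": ["Ingress"],  # no ingress rules listed, so nothing is allowed
        },
    }


def allow_same_namespace(namespace: str) -> dict:
    """Allow inbound traffic only from pods in the same namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-namespace", "namespace": namespace},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Ingress"],
            # A bare podSelector in "from" selects pods in the policy's own namespace.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }


if __name__ == "__main__":
    for manifest in (default_deny_ingress("team-a"), allow_same_namespace("team-a")):
        print(json.dumps(manifest, indent=2))
```

Network policies are additive, so the two together isolate the namespace from its neighbors while leaving traffic inside it untouched. They take effect only when the cluster's network plugin enforces NetworkPolicy.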

Limits and quotas: Kubernetes allows granular configurations of resources. For example, each pod can specify how much CPU and memory it requires. It is also possible to limit the total usage for a workload and for a namespace. This is required in shared environments, to prevent a workload from eating up a majority of the resources and starving other workloads.
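The per-namespace cap and the per-container defaults described above map to two Kubernetes objects, ResourceQuota and LimitRange. The sketch below builds both; every number, the object names, and the namespace `team-a` are illustrative assumptions, not recommendations.

```python
import json


def namespace_quota(namespace: str, cpu: str, memory: str, max_pods: int) -> dict:
    """Cap the total CPU, memory, and pod count a namespace may consume."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "compute-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu,
                "requests.memory": memory,
                "limits.cpu": cpu,
                "limits.memory": memory,
                "pods": str(max_pods),  # quantities are serialized as strings
            }
        },
    }


def container_defaults(namespace: str) -> dict:
    """Give containers default requests and limits when they declare none."""
    return {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {"name": "container-defaults", "namespace": namespace},
        "spec": {
            "limits": [
                {
                    "type": "Container",
                    "defaultRequest": {"cpu": "100m", "memory": "128Mi"},
                    "default": {"cpu": "500m", "memory": "512Mi"},
                }
            ]
        },
    }


if __name__ == "__main__":
    # Illustrative values only.
    print(json.dumps(namespace_quota("team-a", "8", "16Gi", 40), indent=2))
    print(json.dumps(container_defaults("team-a"), indent=2))
```

Pairing the two matters: once a quota constrains CPU or memory, pods that omit requests or limits for those resources are rejected, and the LimitRange defaults keep such pods admissible.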

Automated add-ons: Nirmata provides flexible ways to deliver add-ons such as ingress, logging, monitoring, and backups as self-service resources for developers, all centrally configured and managed.

Flexible access: Whether developers prefer a CLI or a UI, Nirmata can deliver virtual clusters on demand.